Google AI Prompt Engineering Best Practices: 12 Key Techniques from 2025 White Paper

1. Provide examples

One-shot/few-shot: You can provide a single example or multiple examples.

Examples show the model the characteristics of the output you want, such as structure, tone, and style, so it can produce results that match them.

Example 1: Product description generation (Few-shot)

Prompt:

Write an e-commerce description for a new Bluetooth headset based on the example:

Example product: Smartwatch

Features: 50m water resistance/2-week battery life/blood oxygen detection

Copy: Challenge the depths of 50 meters, accompanying you to explore the underwater world. Long-lasting battery life of 14 days, continuous health monitoring day and night...

New product: Wireless noise-canceling headphones

Features: 40dB active noise cancellation/30-hour battery life/spatial audio

Effect explanation: The example demonstrates how to transform technical parameters into scenario-based descriptions. The model will imitate the three-part structure of "technical specifications + usage scenarios + emotional resonance."

Example 2: Poetry creation (One-shot)

Prompt:

Please create a seven-character quatrain about autumn in the style of the example:

Example:

枫红稻黄秋意浓,雁阵南飞破长空。

闲坐庭前观叶落,一壶清茶伴金风。

New work:

Effect explanation: The example provides the tonal pattern, rhyme scheme, and typical imagery. The model will carry over the seasonal elements, the parallel structure, and the classical artistic conception (意境, yijing).

2. Design with simplicity

Keep prompts simple: prefer verbs that describe the action you want, and avoid obscure expressions.

Try using action verbs. Here are some examples:

Act, analyze, categorize, compare, create, describe, define, evaluate, extract, find, generate, identify, list, measure, organize, parse, select, predict, provide, sort, recommend, return, retrieve, rewrite, choose, present, summarize, translate, write.

3. Be specific about the output

Ask the model in a clear and concise way, just as you would in everyday conversation. If the request is vague or circles around the point, the model has to guess, and you may not get the answer you want.

Be straightforward about what exactly you need: not just the final goal but also the details of the deliverable, such as length, tone, and style.

DO:

Generate a 3 paragraph blog post about the top 5 video game consoles. The blog post should be informative and engaging, and it should be written in a conversational style.

DO NOT:

Generate a blog post about video game consoles.

4. Use Instructions over Constraints

Instructions and constraints are both used in prompting to guide the output of an LLM.

- An instruction gives explicit direction on the desired format, style, or content of the response. It tells the model what to do or produce.

- A constraint sets limitations or boundaries on the response. It tells the model what not to do or what to avoid.

Prefer instructions where possible. This aligns with how humans prefer positive instructions over lists of what not to do.

DO:

Generate a 1 paragraph blog post about the top 5 video game consoles. Only discuss the console, the company who made it, the year, and total sales.

DO NOT:

Generate a 1 paragraph blog post about the top 5 video game consoles. Do not list video game names.

5. Control the max token length

In some scenarios the output must stay short, because fields such as titles and descriptions have character limits. You can cap the length with the max-token setting in the model configuration, or explicitly request a specific length in the prompt:

"Explain quantum physics in a tweet-length message."

"Limit the output to within 80 characters."

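Models do not always respect a requested length exactly, so a post-check on the output can be useful. Below is a minimal sketch, assuming a hypothetical helper named `fit_to_limit` (it is not from the white paper) that returns the text unchanged when it fits and otherwise cuts it at the last word boundary.

```python
def fit_to_limit(text: str, max_chars: int = 80) -> str:
    """Return text unchanged if it fits; otherwise cut at a word boundary and add an ellipsis."""
    text = text.strip()
    if len(text) <= max_chars:
        return text
    # Reserve one character for the ellipsis, then drop the trailing partial word.
    cut = text[: max_chars - 1]
    if " " in cut:
        cut = cut[: cut.rfind(" ")]
    return cut.rstrip(" ,;:.") + "…"


short_title = fit_to_limit("Wireless noise-canceling headphones")
long_title = fit_to_limit("word " * 40)  # far longer than 80 characters
```

In practice it is often better to re-prompt with a stricter length instruction when the check fails; truncation is only a fallback.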
6. Use variables in prompts

This technique is borrowed from programming: identify the parts of the prompt that change between uses, turn them into variables, and fill in the values each time the prompt is used. The same prompt can then be reused across a much wider range of inputs.

![[2025-02-05-img-3-Reading-TechAI-PromptEngineering-Google-whitepaper_Prompt Engineering_v4-Content-Best Practices-Use variables in prompts.png]]
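As a rough illustration of prompt variables, the sketch below uses Python's standard `string.Template`. The template text, the `$city` variable, and the `render_prompt` helper are assumptions made for this example.

```python
from string import Template

# Hypothetical template: $city is the only part that changes between uses.
TRAVEL_PROMPT = Template("You are a travel guide. Tell me a fact about the city: $city")


def render_prompt(city: str) -> str:
    """Fill in the template; substitute() raises KeyError if a variable is missing."""
    return TRAVEL_PROMPT.substitute(city=city)


prompts = [render_prompt(city) for city in ("Amsterdam", "Madrid")]
```

Keeping the template in one place means a wording change is made once and applies to every input.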

7. Experiment with input formats and writing styles

"Testing! Testing!! Testing!!!

Different models, model configurations, prompt formats, word choices, and submission methods can lead to different results. It is important to experiment with prompt attributes such as style, word choice, and prompt type (zero-shot, few-shot, system prompts).

A prompt aimed at generating text about the revolutionary game console Sega Dreamcast can be phrased as a question, statement, or instruction, leading to different outputs:

- Question: What is the Sega Dreamcast, and why is it such a revolutionary gaming console?

- Statement: The Sega Dreamcast is a sixth-generation video game console released by Sega in 1999. It...

- Instruction: Write a paragraph describing the Sega Dreamcast gaming console and explain why it is so revolutionary."

8. For few-shot prompting with classification tasks, mix up the classes

When using few-shot prompting for classification tasks, make sure the examples provided are not in a fixed order but mixed together, allowing the model to learn patterns rather than the sequence of examples.

Example 1: Sentiment Classification

Incorrect Prompt (Fixed Order)

Text: "The visual effects in this movie were stunning!" → Sentiment: Positive

Text: "Great service and elegant environment." → Sentiment: Positive

Text: "Complete waste of my time." → Sentiment: Negative

Please classify: "The character development was very shallow." →

Issue: Providing consecutive samples of the same category might lead the model to learn incorrect patterns like "the third sentence is always negative."

Correct Prompt (Mixed Categories)

Text: "The character development was very shallow." → Sentiment: Negative

Text: "The cinematography was poetic" → Sentiment: Positive

Text: "The plot had too many holes" → Sentiment: Negative

Please classify: "The soundtrack perfectly matched the story's atmosphere" →

Effect: Forces the model to focus on language patterns (such as the negative keywords "shallow" and "holes") rather than memorizing the sequence.

Example 2: Topic Classification

Incorrect Prompt

Article Title: "Mars Rover Discovers Organic Molecules" → Category: Technology

Article Title: "New Quantum Computing Breakthrough" → Category: Technology

Article Title: "Golden State Warriors Win Championship" → Category: Sports

Please classify: "AI Model Diagnoses Cancer with 95% Accuracy" →

Issue: The overly structured tech/tech/sports pattern might make the model focus on the position of each example rather than its content.

Correct Prompt

Article Title: "Gene-Editing Therapy Approved for Clinical Trials" → Category: Technology

Article Title: "NBA Finals MVP Announced" → Category: Sports

Article Title: "Blockchain Voting System Tested in Estonia" → Category: Technology

Please classify: "World Cup Qualifiers Schedule Released" →
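One way to avoid grouped classes is to reorder the examples programmatically before building the prompt. The sketch below is an assumption-laden illustration, not a method from the white paper: the hypothetical `interleave_by_label` helper takes examples round-robin across labels, and `build_prompt` formats them in the style used above.

```python
from collections import defaultdict
from itertools import zip_longest


def interleave_by_label(examples):
    """Round-robin across labels so no class sits in one contiguous block."""
    buckets = defaultdict(list)
    for text, label in examples:
        buckets[label].append((text, label))
    mixed = []
    # zip_longest pads shorter buckets with None, which is filtered out.
    for group in zip_longest(*buckets.values()):
        mixed.extend(item for item in group if item is not None)
    return mixed


def build_prompt(examples, query):
    """Format mixed examples followed by the text to classify."""
    lines = [
        f'Text: "{text}" → Sentiment: {label}'
        for text, label in interleave_by_label(examples)
    ]
    lines.append(f'Please classify: "{query}" →')
    return "\n".join(lines)


examples = [
    ("The visual effects in this movie were stunning!", "Positive"),
    ("Great service and elegant environment.", "Positive"),
    ("Complete waste of my time.", "Negative"),
]
mixed_labels = [label for _, label in interleave_by_label(examples)]
prompt = build_prompt(examples, "The character development was very shallow.")
```

If strict alternation is itself too regular for your task, a seeded `random.Random(seed).shuffle(...)` is a common alternative.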

9. Adapt to model updates

Keep up with model updates. Newer model versions often give better baseline results, so move to them when they are released, then re-test and adjust your prompts to take advantage of the changes.

10. Experiment with output formats

- JSON output is convenient to process programmatically

- Requiring JSON in the prompt forces the model to follow a fixed structure and limits hallucinated content

Input

Classify movie reviews as positive, neutral or negative. Return valid JSON:

Review: "Her" is a disturbing study revealing the direction humanity is headed if AI is allowed to keep evolving, unchecked. It's so disturbing I couldn't watch it.

Schema:

"""

MOVIE:

{

"sentiment": String "POSITIVE" | "NEGATIVE" | "NEUTRAL",

"name": String

}

MOVIE REVIEWS:

{

"movie_reviews": [MOVIE]

}

"""

JSON Response:

Output

{

"movie_reviews": [

{

"sentiment": "NEGATIVE",

"name": "Her"

}

]

}
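Because the response is JSON, it can be checked in code before it is used. The following is a minimal sketch using only the standard `json` module; the `parse_movie_reviews` helper and its error messages are assumptions for illustration, with the allowed values taken from the schema in the prompt above.

```python
import json

ALLOWED_SENTIMENTS = {"POSITIVE", "NEGATIVE", "NEUTRAL"}


def parse_movie_reviews(raw: str) -> list:
    """Parse the model's JSON response and check it against the prompt's schema."""
    data = json.loads(raw)  # raises json.JSONDecodeError on invalid JSON
    reviews = data["movie_reviews"]  # raises KeyError if the key is missing
    if not isinstance(reviews, list):
        raise ValueError("movie_reviews must be a list")
    for review in reviews:
        if not isinstance(review, dict):
            raise ValueError("each review must be an object")
        if review.get("sentiment") not in ALLOWED_SENTIMENTS:
            raise ValueError(f"unexpected sentiment: {review.get('sentiment')!r}")
        if not isinstance(review.get("name"), str):
            raise ValueError("name must be a string")
    return reviews


raw_response = '{"movie_reviews": [{"sentiment": "NEGATIVE", "name": "Her"}]}'
reviews = parse_movie_reviews(raw_response)
```

A failed check is a useful signal to retry the request or tighten the prompt rather than pass malformed data downstream.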

11. CoT Best Practices - Chain of Thought

Chain-of-thought prompting is typically used for reasoning problems that have a single correct answer and demand rigor, so the temperature should be set to 0.

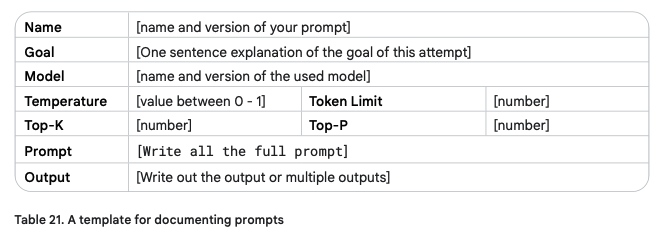
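To see why temperature 0 suits single-answer reasoning, here is a toy sketch of temperature-scaled sampling over made-up token scores. It treats temperature 0 as greedy (argmax) decoding; the `sample_token` function and the logits are assumptions for illustration, and real model APIs may implement this differently.

```python
import math
import random


def sample_token(logits: dict, temperature: float, rng: random.Random) -> str:
    """Pick the next token; temperature 0 is treated as greedy (argmax) decoding."""
    if temperature == 0:
        return max(logits, key=logits.get)
    # Temperature-scaled softmax; subtracting the max keeps exp() numerically stable.
    peak = max(logits.values())
    weights = [math.exp((score - peak) / temperature) for score in logits.values()]
    return rng.choices(list(logits), weights=weights, k=1)[0]


logits = {"42": 2.0, "41": 1.5, "forty": 0.5}  # made-up scores for illustration
rng = random.Random(0)
greedy = {sample_token(logits, 0, rng) for _ in range(20)}
sampled = {sample_token(logits, 1.5, rng) for _ in range(200)}
```

With temperature 0 the highest-scoring token is chosen every time, so repeated runs give the same answer; a higher temperature spreads the choices across several tokens.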

12. Record Various Prompt Attempts

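Document every prompt attempt in detail, including the model, its configuration, the prompt, and the output, so you can learn over time what worked and what did not. When building a Retrieval-Augmented Generation (RAG) system, also record the RAG-specific factors that influenced what content was inserted into the prompt: your queries, chunking settings, chunk outputs, and other relevant information.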
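A simple structured record makes attempts easy to compare later. The sketch below is one possible shape, not a prescribed format: the `PromptAttempt` fields, the `to_jsonl_line` helper, and the example values (including the model name) are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class PromptAttempt:
    """One logged prompt attempt; field names are illustrative assumptions."""
    name: str
    goal: str
    model: str
    temperature: float
    prompt: str
    output: str
    # RAG-specific context that shaped what was inserted into the prompt.
    query: str = ""
    chunk_size: Optional[int] = None
    retrieved_chunks: List[str] = field(default_factory=list)


def to_jsonl_line(attempt: PromptAttempt) -> str:
    """Serialize one attempt as a single JSON line, suitable for appending to a log file."""
    return json.dumps(asdict(attempt), ensure_ascii=False)


attempt = PromptAttempt(
    name="console-blog-v2",
    goal="3 paragraph blog post about the top 5 video game consoles",
    model="example-model",  # placeholder, not a real model name
    temperature=0.2,
    prompt="Generate a 3 paragraph blog post about the top 5 video game consoles.",
    output="(model output goes here)",
    query="top video game consoles",
    chunk_size=512,
    retrieved_chunks=["(retrieved chunk text goes here)"],
)
line = to_jsonl_line(attempt)
```

Appending one such line per attempt to a `.jsonl` file keeps a history that can be filtered or diffed later.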